Self-Supervised Pretraining Improves Self-Supervised Pretraining (DeepAI)

Self-Supervised Pretraining Improves Self-Supervised Pretraining: Supplementary Material. Colorado J Reed∗¹, Xiangyu Yue, Ani Nrusimha, Sayna Ebrahimi, Vivek Vijaykumar³, Richard Mao¹, Bo Li, Shanghang Zhang¹, Devin Guillory¹, Sean Metzger¹,², Kurt Keutzer, Trevor Darrell¹. ¹UC Berkeley, ²UCSF, ³Georgia Tech. ∗Equal contribution; correspondence to [email protected].

Self-Supervised Representation Learning (Lil'Log)

Abstract. Self-supervised pretraining has made few-shot learning possible for many NLP tasks. However, the pretraining objectives are typically not adapted specifically for in-context few-shot learning. In this paper, we propose to use self-supervision in an intermediate training stage between pretraining and downstream few-shot usage, with the goal …
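The snippet breaks off before naming the intermediate self-supervised tasks. Purely as a hypothetical illustration, the sketch below assumes a masked-word task: unlabeled sentences are turned into solved demonstrations plus one unsolved query and packed into a single few-shot prompt, so the training target comes from the text itself. The `Input:`/`Output:` format and the helper names `mask_one_word` and `build_incontext_example` are assumptions, not the paper's method.

```python
import random
from typing import List, Tuple


def mask_one_word(sentence: str, rng: random.Random) -> Tuple[str, str]:
    """Replace one randomly chosen word with [MASK]; return (masked_text, hidden_word)."""
    words = sentence.split()
    i = rng.randrange(len(words))
    hidden = words[i]
    words[i] = "[MASK]"
    return " ".join(words), hidden


def build_incontext_example(sentences: List[str], k: int, seed: int = 0) -> Tuple[str, str]:
    """Hypothetical helper: pack k solved demonstrations plus one unsolved query.

    Every label is derived from the unlabeled text, so no human annotation is needed.
    Returns (prompt, target), where target is the hidden word of the query.
    """
    rng = random.Random(seed)
    chosen = rng.sample(sentences, k + 1)
    blocks = []
    for s in chosen[:k]:
        masked, hidden = mask_one_word(s, rng)
        blocks.append(f"Input: {masked}\nOutput: {hidden}")
    query_masked, query_target = mask_one_word(chosen[k], rng)
    blocks.append(f"Input: {query_masked}\nOutput:")
    return "\n\n".join(blocks), query_target


corpus = [
    "the cat sat on the mat",
    "birds fly south in winter",
    "water boils at one hundred degrees",
    "the library opens at nine",
]
prompt, target = build_incontext_example(corpus, k=2, seed=0)
print(prompt)
print("target:", target)
```

A language model trained in such an intermediate stage would be asked to generate `target` after `prompt`; the seeded `random.Random` only keeps the toy example reproducible.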

(HPT) Self-Supervised Pretraining Improves Self-Supervised Pretraining (Muyun99's wiki)

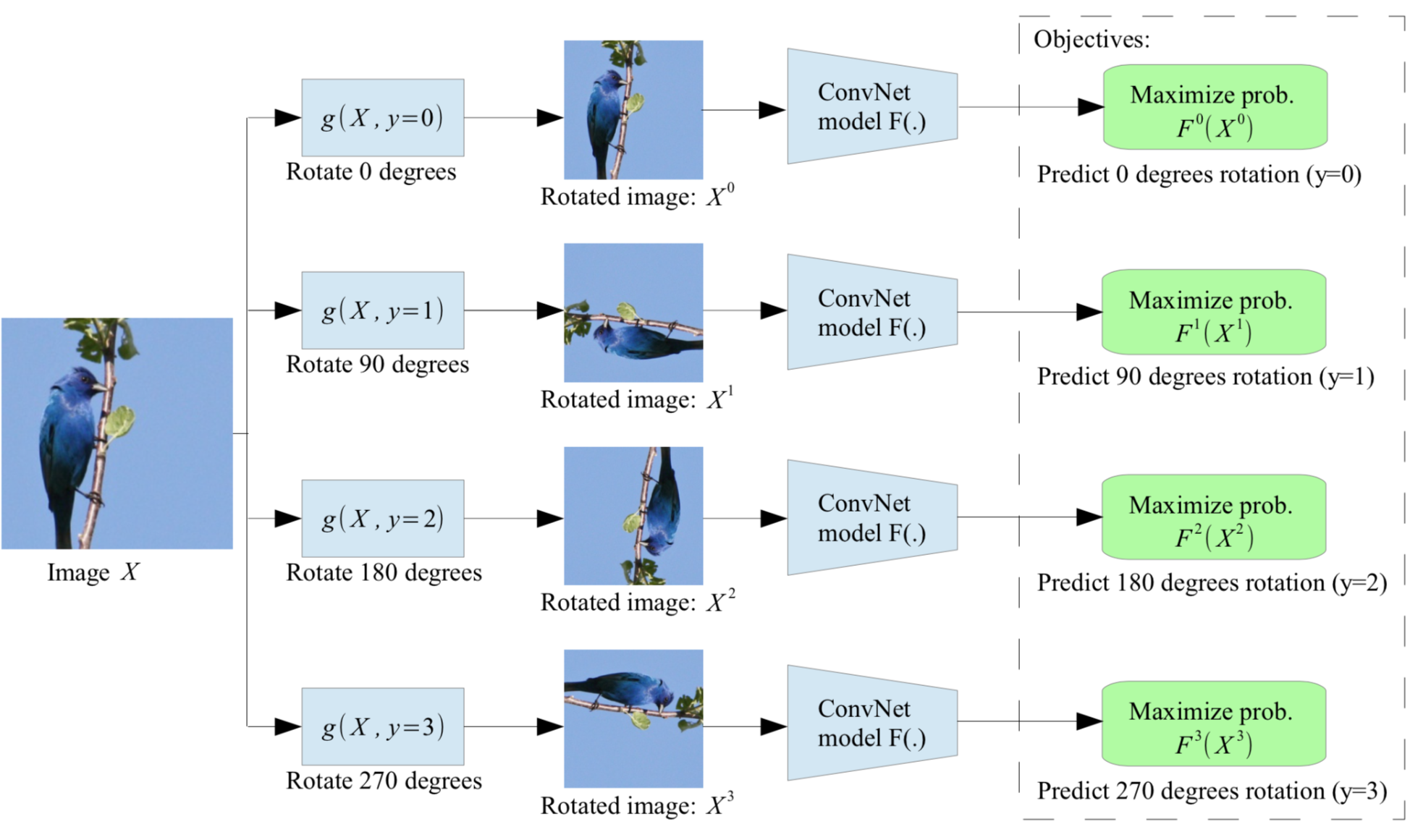

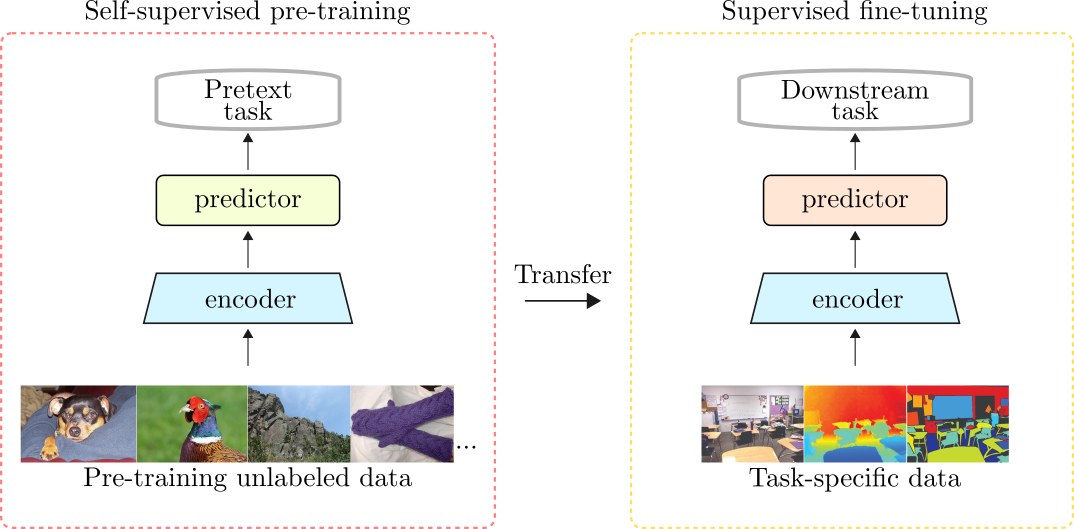

Typically, self-supervised pretraining uses unlabeled source data to pretrain a network that will be transferred to a supervised training process on a target dataset. Self-supervised pretraining is particularly useful when labeling is costly, such as in medical and satellite imaging [53, 8].
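The paragraph does not say which pretext task supplies the training signal. As one common, swapped-in example, rotation prediction generates labels from the unlabeled images themselves: each image is rotated by 0, 90, 180, or 270 degrees and the network must predict the rotation. The minimal sketch below, assuming toy images stored as nested lists, only builds those (input, label) pairs; training the network on them and transferring its weights to the supervised target task are left out.

```python
from typing import List, Tuple

Image = List[List[int]]  # toy grayscale image: a list of pixel rows


def rotate90(img: Image) -> Image:
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def rotation_pretext_pairs(images: List[Image]) -> List[Tuple[Image, int]]:
    """Turn unlabeled images into (input, label) pairs for rotation prediction.

    Label k means the input was rotated k * 90 degrees clockwise.
    """
    pairs = []
    for img in images:
        rotated = img
        for k in range(4):
            pairs.append((rotated, k))
            rotated = rotate90(rotated)
    return pairs


unlabeled = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
pairs = rotation_pretext_pairs(unlabeled)
print(len(pairs))  # 8
print(pairs[1])    # ([[3, 1], [4, 2]], 1)
```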

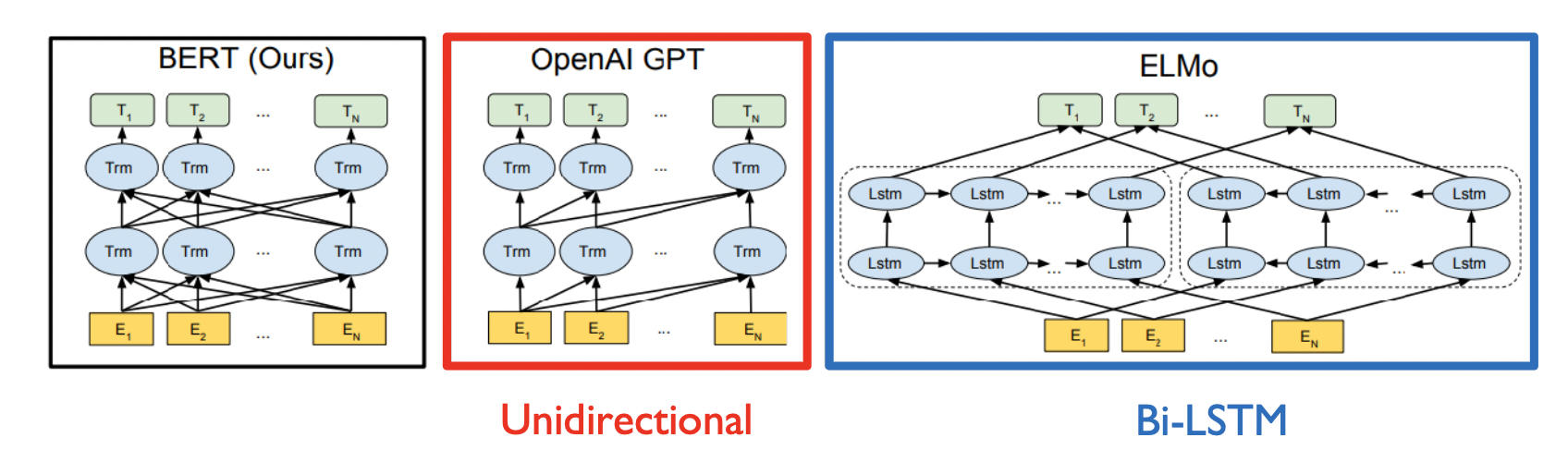

Self-Supervised Pretraining Models (GPT, BERT, ...)

Self-supervised learning (SSL), an emerging learning paradigm that can enable training on massive amounts of unlabeled data, has recently received considerable attention (Von Kügelgen et al. 2021; Reed et …

(PDF) Self-Supervised Pretraining and Controlled Augmentation Improve Rare Wildlife Recognition

… one can expect self-supervision to be useful in practice. We find that leading self-supervised pretraining methods are useful with a small labeling budget, but their utility tends to decrease when labels are ample. In particular, as the number of labels increases, the most common outcome is Fig. 1 (c), where gains from self-supervised pretraining tend to diminish …

Representation Learning Through Self-Prediction Task Optimization (Thalles' blog)

Abstract. While self-supervised pretraining has proven beneficial for many computer vision tasks, it requires expensive and lengthy computation, large amounts of data, and is sensitive to data augmentation.

(PDF) Self-Supervised Pretraining Improves Self-Supervised Pretraining

To exploit the effects of multiple objectives, we also embed this task in a multi-task setting by adding either a self-supervised classification task or a self-supervised regression task. In our experiments, we show that our pretraining improves performance over ImageNet initialization and reduces the number of epochs until convergence by up to 47%.
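The snippet does not say what "this task" is or how the objectives are combined. A weighted sum of losses is one common choice, so the minimal sketch below assumes it: a primary pretraining loss plus either an auxiliary classification loss (cross-entropy) or an auxiliary regression loss (mean squared error). The loss values, logits, and the `weight` parameter are hypothetical.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for a single example, stabilized by subtracting the max logit."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error for a single regression example."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(primary: float, auxiliary: float, weight: float = 1.0) -> float:
    """Assumed combination: primary pretraining loss plus a weighted auxiliary loss."""
    return primary + weight * auxiliary


# Hypothetical values: the primary loss for a batch, plus one auxiliary head.
primary_loss = 0.8
aux_cls = cross_entropy([2.0, 0.5, -1.0], target=0)  # classification variant
aux_reg = mse([0.2, 0.4], [0.0, 0.5])                # regression variant
print(multitask_loss(primary_loss, aux_cls, weight=0.5))
print(multitask_loss(primary_loss, aux_reg, weight=0.5))
```

In a real setup both terms would be computed from the same shared encoder, so gradients from the auxiliary head also shape the pretrained representation.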

(PDF) A Masked Self-Supervised Pretraining Method for Face Parsing

Self-supervised learning is a deep learning approach in which models learn from unstructured or unlabeled data without … Citation: Shi C, Wang Y, Wu Y, Chen S, Hu R, Zhang M, Qiu B and Wang X (2023) Self-supervised pretraining improves the performance of classification of task functional magnetic resonance imaging. Front. Neurosci. 17:1199312.

(PDF) Heuristic Attention Representation Learning for Self-Supervised Pretraining

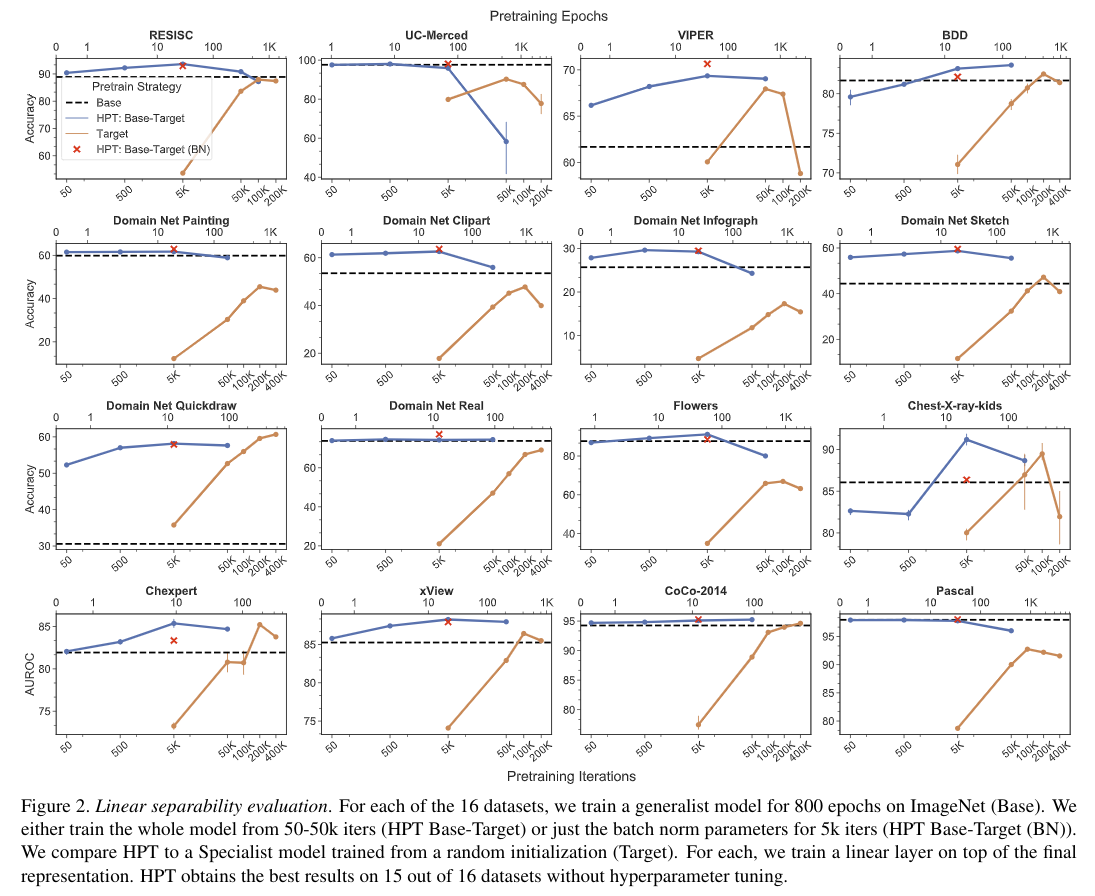

This work explores Hierarchical PreTraining (HPT), which decreases convergence time and improves accuracy by initializing the pretraining process with an existing pretrained model, and which provides a simple framework for obtaining better pretrained representations with fewer computational resources.
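The core HPT idea is to start a pretraining stage from an existing pretrained model instead of from scratch. The toy sketch below is not the HPT implementation: a quadratic loss stands in, as an assumption, for the real self-supervised objective, and the hypothetical `train_stage` helper and the two optima are invented for illustration. It shows that when the base solution lies close to the target solution, initializing from it needs fewer gradient steps to converge.

```python
import math
from typing import List, Tuple

Vector = List[float]


def train_stage(init: Vector, optimum: Vector, lr: float = 0.1,
                tol: float = 1e-3, max_steps: int = 10_000) -> Tuple[Vector, int]:
    """Gradient descent on the toy loss 0.5 * ||w - optimum||^2, starting from `init`.

    Returns the final weights and the number of steps taken to get within `tol`.
    """
    w = list(init)
    for step in range(max_steps):
        if math.dist(w, optimum) <= tol:
            return w, step
        # The gradient of 0.5 * ||w - optimum||^2 is (w - optimum).
        w = [wi - lr * (wi - oi) for wi, oi in zip(w, optimum)]
    return w, max_steps


# Hypothetical optima: a generic "base" task and a related target task.
base_optimum = [1.0, 2.0]
target_optimum = [1.1, 1.9]

# Stage 1: generic pretraining (the role an existing pretrained model plays).
base_weights, _ = train_stage([0.0, 0.0], base_optimum)

# Stage 2a: pretrain on the target objective from scratch.
_, scratch_steps = train_stage([0.0, 0.0], target_optimum)

# Stage 2b: hierarchical pretraining, initialized from the base weights.
_, hpt_steps = train_stage(base_weights, target_optimum)

print(scratch_steps, hpt_steps)
```

The saving in the toy comes entirely from the base and target optima being close; if they were far apart, the warm start would help little, which loosely mirrors the concern about pretraining on dissimilar data.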

Self-supervised Pretraining of Visual Features in the Wild (Papers With Code)

GitHub: cjrd/selfsupervisedpretraining, a repository providing a wide range of self-supervised …

While self-supervised pretraining has proven beneficial for many computer vision tasks, it requires expensive and lengthy computation, large amounts of data, and is sensitive to data augmentation. Prior work demonstrates that models pretrained on datasets dissimilar to their target data, such as chest X-ray models trained on ImageNet, underperform models trained from scratch.

Self-Supervised Learning: Definition, Tutorial & Examples

(PDF) SPAKT: A Self-supervised PretrAining method for Knowledge Tracing

Recently, self-supervised pretraining, an unsupervised pretraining method that self-labels data to learn salient feature representations, has outperformed supervised pretraining in an increasing number of computer vision applications [5, 7, 4]. These advances come from instance contrastive learning, where a model is trained to identify visually augmented images that originated from the same image.
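Instance contrastive learning is commonly trained with an InfoNCE-style loss: the embedding of one augmented view (the anchor) should score its sibling view (the positive) higher than views of other images (the negatives). The minimal sketch below assumes the encoder has already produced the embeddings; the vectors and the temperature of 0.1 are hypothetical, and the specific methods cited above may use variants of this loss.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: List[Sequence[float]], temperature: float = 0.1) -> float:
    """InfoNCE for one anchor: negative log-softmax score of the positive among
    the positive plus all negatives, using temperature-scaled cosine similarity."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max logit for numerical stability
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


anchor = [1.0, 0.0]                     # embedding of one augmented view
positive = [0.9, 0.1]                   # another view of the same image
negatives = [[0.0, 1.0], [-1.0, 0.2]]   # views of other images
print(info_nce(anchor, positive, negatives))
```

The loss is small when the positive is the anchor's most similar vector and grows when a negative outranks it, which is the "identify the augmented view of the same image" objective expressed as a number to minimize.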

Our self-supervised approach. (a) The task of reconstructing images and …

Towards Few-Shot Adaptation of Foundation Models via Multitask Finetuning. Zhuoyan Xu, Zhenmei Shi, Junyi Wei, Fangzhou Mu, Yin Li, Yingyu Liang (UW-Madison). SAGA Seminar. Paradigm shift: supervised learning → pretraining + adaptation.

Self-Supervised Pretraining for 2D Medical Image Segmentation (DeepAI)

(PDF) Self-Supervised Pretraining Improves Performance and Inference Efficiency in Multiple Lung …

Self-supervised CL-based pretraining enhances data representations and therefore enables the development of robust, generalizable deep learning (DL) models, even with small labeled datasets.
